At the Kapor Center, our signature three-summer educational program (SMASH Academy) aims to prepare underrepresented high school students of color to pursue careers in science, technology, engineering, and mathematics (STEM) and computing through access to courses, support networks, and opportunities for social and personal development.

In the nonprofit sector, evaluations can be driven by funder requirements, which often focus on outcomes. However, by focusing solely on outcomes, teams can lose sight of the goal of STEM evaluation: to inform programming (through process evaluation tools such as observation protocols and course evaluations) so that youth of color are prepared for the future STEM economy.

To keep that goal in focus, the Kapor Center grounds its work in utilization-focused evaluation. Utilization-focused evaluation begins with the premise that an evaluation's success is measured by the extent to which key stakeholders use it (Patton, 2008). This framework requires joint decision making between the evaluator and stakeholders to determine the purpose of the evaluation, the kind of data to be collected, the type of evaluation design to be created, and the uses of the evaluation. Using this framework shifts evaluation from a linear, top-down approach to a feedback loop involving practitioners.

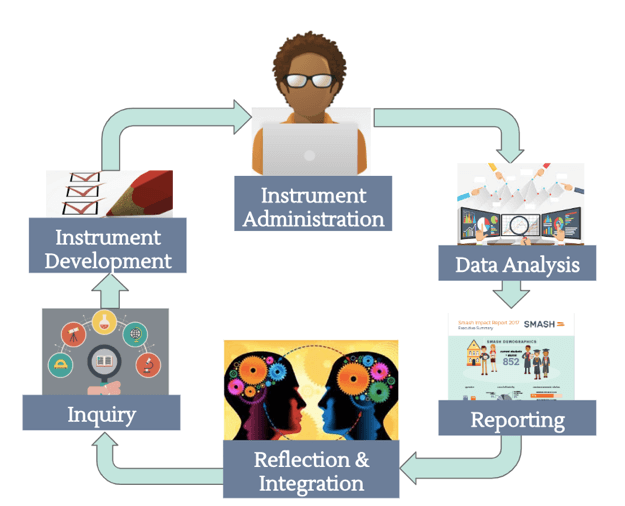

The evaluation cycle at the Kapor Center, a collaboration between our research team and SMASH’s program team, is outlined below:

- Inquiry: This stage begins with conversations with the stakeholders (e.g., programs and leadership teams) about common understandings of short-, medium-, and long-term outcomes as well as the key strategies that drive outcomes. Delineating outcomes has been integral to working transparently toward program priorities.

- Instrument Development: Once groups agree on the goal of the evaluation and our path to it, we develop instruments. Instrument mapping, which links each tool and question to specific outcomes, has been a good practice for opening communication channels among teams.

- Instrument Administration: When working with seasonal staff at the helm of evaluation administration, documentation of processes has been crucial for fidelity. Not surprisingly, with varying levels of experience among program staff, the creation of systems to standardize data collection has been key, including scoring rubrics to be used during observations and guides for survey administration.

- Data Analysis and Reporting: When synthesizing data, analyses and reporting need to not only tell a broad impact story but also provide concrete targets and priorities for the program. In this regard, analyses have encompassed pre-post outcome differences and reports on program experiences.

- Reflection and Integration: At the end of the program cycle, the program team reflects on the data together to inform their path forward. In such a meeting, the team engages in answering three questions: 1) What did you observe about the data? 2) What can you infer about the data and what evidence supports your inference? and 3) What are the next steps to develop and prioritize program modifications?
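For teams that keep their evaluation data in scripts or notebooks, the instrument mapping and pre-post comparison described above could be sketched roughly as follows. This is a minimal illustration, not the Kapor Center's actual tooling: the item names, outcome labels, scores, and function names are all hypothetical, and the pre-post comparison is a simple mean change for students who completed both surveys.

```python
from statistics import mean

# Hypothetical instrument map: each survey item is linked to the outcome it measures.
INSTRUMENT_MAP = {
    "q1_confidence_in_math": "STEM self-efficacy",
    "q2_confidence_in_coding": "STEM self-efficacy",
    "q3_plans_stem_major": "STEM aspirations",
}


def unmapped_outcomes(outcomes, instrument_map):
    """Return the outcomes that no survey item measures yet, in input order."""
    covered = set(instrument_map.values())
    return [outcome for outcome in outcomes if outcome not in covered]


def pre_post_differences(pre, post, instrument_map):
    """Return the mean post-minus-pre change per outcome.

    `pre` and `post` map a student id to a dict of {item: score}.
    Only students and items present in both surveys are compared.
    """
    changes = {}
    for student in pre.keys() & post.keys():
        for item, outcome in instrument_map.items():
            if item in pre[student] and item in post[student]:
                change = post[student][item] - pre[student][item]
                changes.setdefault(outcome, []).append(change)
    return {outcome: mean(values) for outcome, values in changes.items()}


# Made-up example responses on a 1-5 scale; student "s3" has no post survey.
pre_scores = {
    "s1": {"q1_confidence_in_math": 2, "q2_confidence_in_coding": 3, "q3_plans_stem_major": 4},
    "s2": {"q1_confidence_in_math": 3, "q2_confidence_in_coding": 3, "q3_plans_stem_major": 4},
    "s3": {"q1_confidence_in_math": 4, "q2_confidence_in_coding": 2, "q3_plans_stem_major": 3},
}
post_scores = {
    "s1": {"q1_confidence_in_math": 4, "q2_confidence_in_coding": 4, "q3_plans_stem_major": 4},
    "s2": {"q1_confidence_in_math": 4, "q2_confidence_in_coding": 3, "q3_plans_stem_major": 5},
}

program_outcomes = ["STEM self-efficacy", "STEM aspirations", "Sense of belonging"]
gaps = unmapped_outcomes(program_outcomes, INSTRUMENT_MAP)
differences = pre_post_differences(pre_scores, post_scores, INSTRUMENT_MAP)
print("Outcomes without any mapped item:", gaps)
print("Mean pre-post change by outcome:", differences)
```

A coverage check like `unmapped_outcomes` can feed the Instrument Development conversation, while the per-outcome changes give the program team concrete numbers to discuss during Reflection and Integration.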

Developing stronger research-practice ties has been integral to the Kapor Center’s understanding of what works, for whom, and in what context to ensure more youth of color pursue and persist in STEM fields. Beyond the SMASH program, the practice of collective cooperation between researchers and practitioners provides an opportunity to impact strategies across the field.

References

Patton, M. Q. (2008). Utilization-focused evaluation. Newbury Park, CA: Sage.

Except where noted, all content on this website is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

EvaluATE is supported by the National Science Foundation under grant number 2332143. Any opinions, findings, and conclusions or recommendations expressed on this site are those of the authors and do not necessarily reflect the views of the National Science Foundation.
