The Formative Assessment Systems for ATE project (FAS4ATE) focuses on assessment practices that serve the ongoing evaluation needs of projects and centers. Determining these information needs and organizing data collection activities is a complex and demanding task, and we’ve used logic models as a way to map them out. Over the next five weeks, we offer a series of blog posts that provide examples and suggestions of how you can make formative assessment part of your ATE efforts. – Arlen Gullickson, PI, FAS4ATE

Week 3 – Why am I not seeing the results I expected?

Using a logic model helps you to see the connections between the parts of your project. Once you have a clear connection set up, another critical consideration is dosage. Dosage is how much of an intervention (activities like training, outputs like curriculum, etc.) is delivered to the target audience. Understanding dosage is critical to understanding the size of outcomes you can reasonably expect to see as a result of your efforts. As a program developer, it is essential that you know how much your participants need to engage with your intervention to achieve the desired impact.

Think about dosage in relation to medicine. If you have a mild bacterial infection, a doctor will prescribe a specific antibiotic at a specific dosage. Assuming you take all your pills, you should recover. If you don’t feel better, it may be because the bacteria were stronger than the antibiotic. The doctor will then prescribe a different antibiotic, or a stronger dose, to ensure you get better. So the dosage is directly related to the desired outcome.

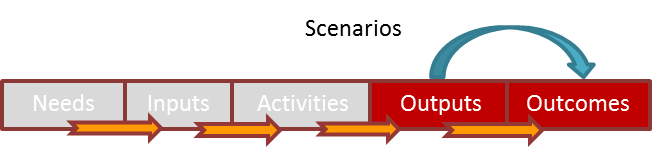

In a program, the same is true: The dose needs to match the desired size of change. Consider the New Media Enabled Technician ATE project, which Joyce Malyn-Smith from EDC discussed in our first FAS4ATE webinar. They wanted to improve what students know and are able to do with social media to market their small businesses (outcome). The EDC team planned to create social media scenarios and grading rubrics for community college faculty to use in their existing classes. Scenarios and rubrics (outputs) were the initial, intended dose.

However, preliminary discussions with potential faculty participants showed the majority of them had limited social media experience. They wouldn’t be able to use the scenarios as a free-standing intervention, because they weren’t familiar with the subject matter. Thus, the dosage would not be enough to get the desired result, because of the characteristics of the intended implementers.

So Joyce’s team changed the dosage by creating scenario-driven class projects with detailed instructional resources for the faculty. This adaptation, suited to their target faculty, enabled them to get closer to the desired level of project outcomes.

So as we develop programs from great ideas, we need to think about dosage. How much of that great idea do we need to convey to ensure we get the outcomes we’re looking for? How much do our participants need in terms of engagement or materials to achieve the desired results? The logic model can direct our formative assessment activities to help us discover places where our dosage is not quite right. Then, we can make a change early in the life of the project, like Joyce’s team did with the community college faculty, to ensure we’ve got the correct amount of intervention needed.

Except where noted, all content on this website is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

EvaluATE is supported by the National Science Foundation under grant number 2332143. Any opinions, findings, and conclusions or recommendations expressed on this site are those of the authors and do not necessarily reflect the views of the National Science Foundation.
