No plan of action survives first contact with the enemy – Helmuth von Moltke (paraphrased)

Evaluations are complicated examinations of complex phenomena. It is optimistic to assume that the details of an evaluation won’t change, particularly for a multiyear project. So how can evaluators deal with the inevitable changes? I propose that purposeful documentation of evaluations can help. In this blog post, I focus on the distinctions among three types of documents (the contract, scope of work, and study protocol), each serving a specific purpose.

- The contract codifies legal commitments between the evaluator and client. Contracts invariably outline the price of the work, the period of the agreement, and specifics like payment terms. They are hard to change after execution, and institutional clients often insist on using their own terms. So while it is possible to revise a contract, it is impractical to use the contract to manage and document changes in the evaluation. I advocate including operational details in a separate “scope of work” (SOW) document, which can be external or appended to the contract.

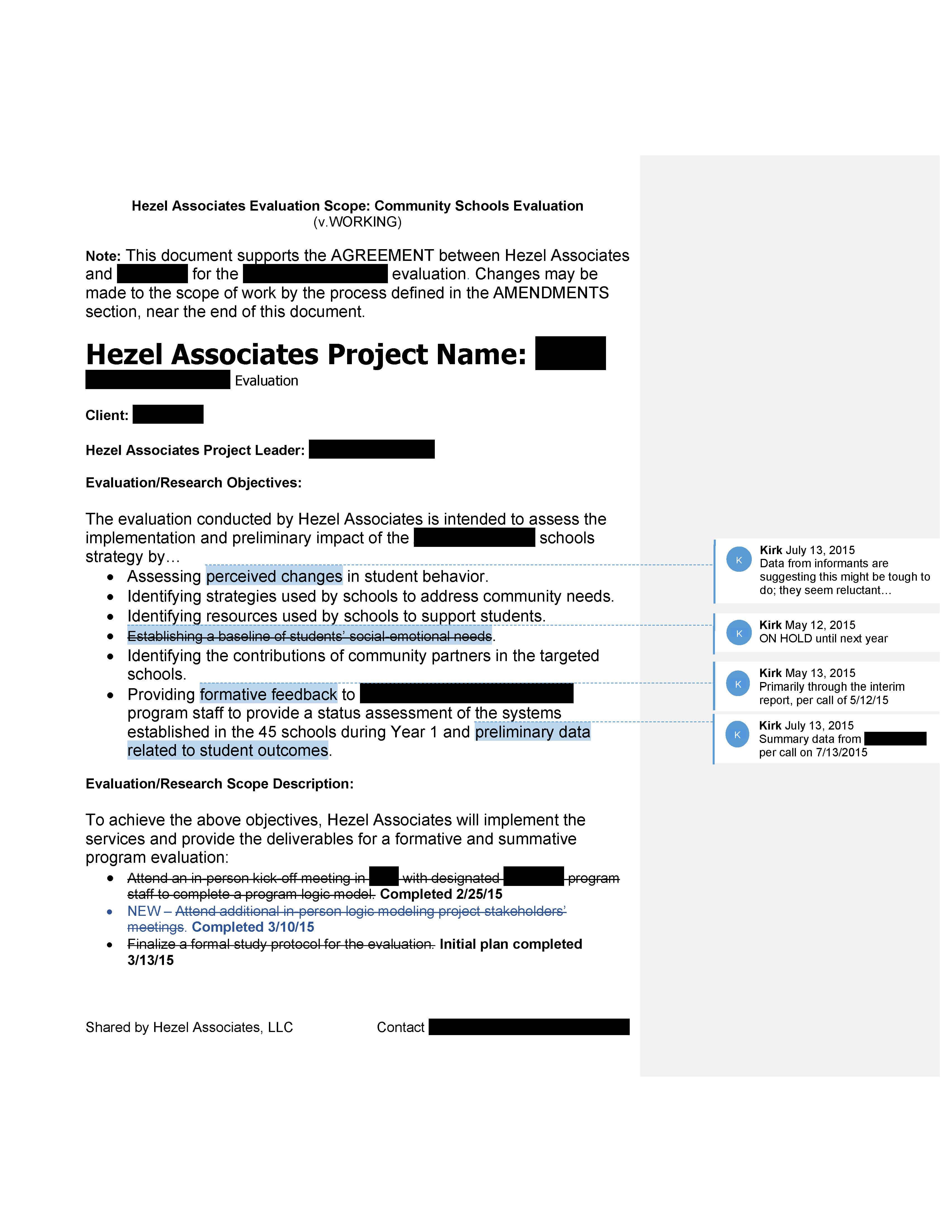

- The scope of work translates the contract into an operational business relationship, listing the responsibilities of both the evaluator and client, tasks, deliverables, and timeline in detail sufficient for effective management of quality and cost. Because the scope of an evaluation will almost certainly change (timelines seem to be the first casualty), it is necessary to establish a process to document “change orders” that detail each revision to the SOW, which party proposed it, and who accepted it, to avoid conflict. If a change to the scope does not affect the price of the work, it may be possible to manage and record changes without having to revisit the contract. I encourage evaluators to maintain “working copies” of the SOW, with changes, dates, and details of approval communications from clients. At Hezel Associates, our practice is to share iterations of the SOW with the client when the work changes, with version dates to document the evaluation-as-implemented so everyone has the same picture of the work.

- The study protocol then goes further, defining technical aspects of the research central to the work being performed. A complex evaluation project might require more than one protocol (e.g., for formative feedback and impact analysis), each being similar in concept to the Methods section of a thesis or dissertation. A protocol details questions to be answered, the study design, data needs, populations, data collection strategies and instrumentation, and plans for analyses and reporting. A protocol frames processes to establish and maintain appropriate levels of study rigor, builds consensus among team members, and translates evaluation questions into data needs and instrumentation to ensure collection of required data before it is too late. Technical aspects of the evaluation are central to the quality of the work but likely to be mostly opaque to the client. I argue that it is crucial that changes to these technical aspects be formally documented in the protocol, but I suggest maintaining such technical information as internal documents for the evaluation team, unless a given change impacts the SOW, at which point the scope must be formally revised as well.
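For teams that track SOW revisions in a script or spreadsheet export rather than a word-processing file, the change-order record described above could be sketched roughly as follows. This is only an illustration: the class names, field names, and the sample change are all hypothetical and are not drawn from any actual Hezel Associates template.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass(frozen=True)
class ChangeOrder:
    """One documented revision to the scope of work (field names are illustrative)."""
    revision: str        # what changed in the SOW
    proposed_by: str     # which party proposed it: "evaluator" or "client"
    accepted_by: str     # who accepted the change
    approved_on: date    # date of the approval communication
    affects_price: bool  # if True, the contract itself may need revisiting


@dataclass
class ScopeOfWork:
    """A working copy of the SOW with its history of change orders."""
    title: str
    changes: List[ChangeOrder] = field(default_factory=list)

    def record(self, change: ChangeOrder) -> None:
        """Append a change order to the working copy."""
        self.changes.append(change)

    def version_date(self) -> Optional[date]:
        """Most recent approval date, usable as the version label; None if unchanged."""
        return max((c.approved_on for c in self.changes), default=None)

    def needs_contract_review(self) -> bool:
        """True if any recorded change affects the price of the work."""
        return any(c.affects_price for c in self.changes)


# Hypothetical example: a timeline change that does not affect price.
sow = ScopeOfWork("Hypothetical multiyear evaluation")
sow.record(ChangeOrder(
    revision="Move interim report deadline back one quarter",
    proposed_by="client",
    accepted_by="evaluator",
    approved_on=date(2024, 3, 1),
    affects_price=False,
))
print(sow.version_date(), sow.needs_contract_review())
```

The `affects_price` flag mirrors the distinction drawn above: changes that leave the price untouched can be managed in the SOW alone, while those that do not may require returning to the contract.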

Each of these types of documentation serves an entirely different function as part of what might be called an “evaluation plan,” and all are important to a successful, high-quality project. Any part may be combined with others in a single file, transmitted to the client as part of a “kit,” maintained separately, or perhaps not shared with the client at all. Regardless, our experience has been that effective documentation will help avoid confusion after marching onto the evaluation field of battle.

Except where noted, all content on this website is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

EvaluATE is supported by the National Science Foundation under grant number 2332143. Any opinions, findings, and conclusions or recommendations expressed on this site are those of the authors and do not necessarily reflect the views of the National Science Foundation.
